Autonomous vehicles rely on more than just complex algorithms. At the heart of every breakthrough in perception, decision-making, and real-time control lies data. Not just any data, but clean, diverse, and well-annotated datasets that push the boundaries of what machines can understand about the road ahead.

In this article, we explore five of the most influential and respected autonomous driving datasets available today. These datasets have become the foundation for countless academic papers, open-source projects, and production-level systems, enabling advancements in object detection, 3D tracking, semantic segmentation, and motion forecasting.

Released by: Karlsruhe Institute of Technology & Toyota Technological Institute at Chicago

The KITTI dataset is widely regarded as the gold standard for early autonomous driving research. It introduced a comprehensive benchmark suite covering stereo vision, optical flow, visual odometry, 3D object detection, and tracking. KITTI was captured on real roads in and around Karlsruhe, Germany, from a vehicle equipped with high-resolution color and grayscale cameras, a Velodyne LiDAR, and a GPS/IMU unit. It set a precedent for how self-driving datasets should be structured.

Although it was released in 2012, more than a decade ago, KITTI remains relevant. Its simple data formats, well-defined evaluation protocols, and public leaderboards still make it useful, and it shaped early neural network architectures for automotive perception.
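
To make the label format concrete, here is a minimal Python sketch that parses one line of a KITTI object-detection label file. It assumes the 15-field layout described in the KITTI object development kit: class, truncation, occlusion, observation angle, 2D box, 3D dimensions, 3D location in camera coordinates, and yaw. An optional 16th confidence field appears only in result files. The `KittiObject` and `parse_kitti_label` names are hypothetical, and the sample line is illustrative.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class KittiObject:
    """One object from a KITTI object-detection label line (hypothetical container)."""
    obj_type: str                  # e.g. "Car", "Pedestrian", "Cyclist", "DontCare"
    truncated: float               # 0.0 (not truncated) to 1.0 (truncated)
    occluded: int                  # 0 visible, 1 partly, 2 largely occluded, 3 unknown
    alpha: float                   # observation angle in radians, [-pi, pi]
    bbox: Tuple[float, ...]        # 2D box in pixels: left, top, right, bottom
    dimensions: Tuple[float, ...]  # 3D size in meters: height, width, length
    location: Tuple[float, ...]    # 3D location x, y, z in camera coordinates, meters
    rotation_y: float              # yaw around the camera Y axis, [-pi, pi]
    score: Optional[float] = None  # only present in detection result files


def parse_kitti_label(line: str) -> KittiObject:
    """Parse one whitespace-separated label line (15 fields, or 16 with a score)."""
    fields = line.split()
    if len(fields) not in (15, 16):
        raise ValueError(f"expected 15 or 16 fields, got {len(fields)}")
    values = [float(v) for v in fields[1:]]
    return KittiObject(
        obj_type=fields[0],
        truncated=values[0],
        occluded=int(values[1]),
        alpha=values[2],
        bbox=tuple(values[3:7]),
        dimensions=tuple(values[7:10]),
        location=tuple(values[10:13]),
        rotation_y=values[13],
        score=values[14] if len(values) == 15 else None,
    )


line = "Pedestrian 0.00 0 -0.20 712.40 143.00 810.73 307.92 1.89 0.48 1.20 1.84 1.47 8.41 0.01"
obj = parse_kitti_label(line)
print(obj.obj_type, obj.location)  # Pedestrian (1.84, 1.47, 8.41)
```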

Released by: Waymo (an Alphabet company)

With the Waymo Open Dataset, Waymo opened its doors to the research community. The dataset is a large-scale collection of driving scenes from several U.S. cities. It includes synchronized high-resolution camera feeds, LiDAR point clouds, and detailed annotations for vehicles, pedestrians, and cyclists.

What sets the Waymo dataset apart is the quality and consistency of its labels. Because the data spans multiple frames, researchers can study temporal behavior and motion forecasting in addition to static object detection. It comes as separate Perception and Motion datasets, tailored to 3D perception and behavior prediction respectively, so it supports a wide range of cutting-edge autonomy research.
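
A simple way to see what multi-frame data enables is a constant-velocity baseline for motion forecasting. The sketch below scores it with average and final displacement error (ADE/FDE). It assumes each trajectory is a list of (x, y) positions in meters, sampled at a fixed rate. The function names are hypothetical. This is not Waymo's evaluation code, and its official motion metrics score multiple candidate trajectories per agent.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def constant_velocity_forecast(history: List[Point], horizon: int) -> List[Point]:
    """Repeat the last observed per-step displacement for `horizon` future steps.

    Assumes `history` is sampled at a fixed rate, so constant velocity is
    equivalent to a constant displacement per step.
    """
    if len(history) < 2:
        raise ValueError("need at least two past positions to estimate velocity")
    (x0, y0), (x1, y1) = history[-2], history[-1]
    dx, dy = x1 - x0, y1 - y0
    return [(x1 + k * dx, y1 + k * dy) for k in range(1, horizon + 1)]


def ade_fde(pred: List[Point], truth: List[Point]) -> Tuple[float, float]:
    """Average and final Euclidean displacement error between aligned trajectories."""
    if not pred or len(pred) != len(truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    errors = [math.dist(p, t) for p, t in zip(pred, truth)]
    return sum(errors) / len(errors), errors[-1]


# Toy example: an agent moving 1 m per step along x, then drifting in y
history = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
future = [(3.0, 0.0), (4.0, 1.0), (5.0, 2.0)]
pred = constant_velocity_forecast(history, horizon=3)
print(pred)                   # [(3.0, 0.0), (4.0, 0.0), (5.0, 0.0)]
print(ade_fde(pred, future))  # (1.0, 2.0)
```

Baselines like this are weak on turns and stops, which is exactly the behavior that learned forecasting models trained on multi-frame data aim to capture.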

Released by: Motional (formerly nuTonomy)

nuScenes was one of the first public datasets to provide a full 360-degree sensor suite. It includes data from six cameras, five radars, and one LiDAR sensor, along with rich metadata for each scene, such as location, time of day, and weather conditions.

The dataset is designed for research into multi-sensor fusion, object detection, tracking, and scene understanding. It includes 1,000 scenes of roughly 20 seconds each, with 3D bounding boxes annotated on keyframes sampled at 2 Hz. It also comes with an easy-to-use Python development kit. nuScenes stands out for its realism, diversity, and level of detail, making it a favorite in the autonomous driving research community.
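
nuScenes stores its annotated keyframes ("samples") as a linked list. Each scene record points to its `first_sample_token`, and each sample carries `prev` and `next` tokens, with an empty string marking either end. The devkit resolves tokens through `nusc.get('sample', token)`. The sketch below mimics the same walk over plain Python dictionaries so it runs without the dataset. The tokens, timestamps, and helper names are made up for illustration.

```python
from typing import Dict, List

# Toy stand-ins for the nuScenes `scene` and `sample` tables (tokens and timestamps are made up).
# nuScenes timestamps are in microseconds; an empty `next` token ends the chain.
samples: Dict[str, dict] = {
    "s1": {"timestamp": 0, "prev": "", "next": "s2"},
    "s2": {"timestamp": 500_000, "prev": "s1", "next": "s3"},
    "s3": {"timestamp": 1_000_000, "prev": "s2", "next": ""},
}
scene = {"name": "toy-scene", "first_sample_token": "s1", "last_sample_token": "s3"}


def keyframe_tokens(scene: dict, samples: Dict[str, dict]) -> List[str]:
    """Walk a scene's keyframes by following `next` tokens from the first sample."""
    tokens = []
    token = scene["first_sample_token"]
    while token:  # an empty string marks the last keyframe
        tokens.append(token)
        token = samples[token]["next"]
    return tokens


def mean_keyframe_interval_s(tokens: List[str], samples: Dict[str, dict]) -> float:
    """Average gap between consecutive keyframes, converted from microseconds to seconds."""
    stamps = [samples[t]["timestamp"] for t in tokens]
    gaps = [b - a for a, b in zip(stamps, stamps[1:])]
    if not gaps:
        raise ValueError("need at least two keyframes")
    return sum(gaps) / len(gaps) / 1e6


tokens = keyframe_tokens(scene, samples)
print(tokens)                                     # ['s1', 's2', 's3']
print(mean_keyframe_interval_s(tokens, samples))  # 0.5
```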

Released by: Argo AI

Argoverse 2 builds on lessons from its predecessor, Argoverse 1, and introduces improved labeling quality, denser point clouds, and more complex urban driving scenarios. It provides synchronized camera and LiDAR data along with detailed HD maps that include lane-level semantics, traffic light locations, and geometry.

This dataset places strong emphasis on map-based reasoning, a critical component in motion forecasting and path planning. Researchers benefit from its alignment with real-world AV development needs, particularly in complex intersections and unstructured road scenarios.
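
Map-based reasoning often starts by associating an agent with its nearest lane. The sketch below assumes each lane is a centerline polyline of (x, y) points in a shared map frame. It picks the lane with the smallest point-to-segment distance. It is a generic geometric illustration with hypothetical lane IDs, not the Argoverse 2 map API.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def point_to_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from point p to the line segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.dist(p, a)  # degenerate segment: a and b coincide
    # Project p onto the infinite line, then clamp to [0, 1] so the foot stays on the segment
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.dist(p, (a[0] + t * dx, a[1] + t * dy))


def nearest_lane(p: Point, centerlines: Dict[str, List[Point]]) -> Tuple[str, float]:
    """Return (lane_id, distance) for the centerline closest to p.

    Each centerline needs at least two points; shorter ones contribute no segments.
    """
    best_id, best_dist = "", math.inf
    for lane_id, line in centerlines.items():
        for a, b in zip(line, line[1:]):
            d = point_to_segment_distance(p, a, b)
            if d < best_dist:
                best_id, best_dist = lane_id, d
    return best_id, best_dist


# Hypothetical lanes in a local map frame (meters)
lanes = {
    "lane_a": [(0.0, 0.0), (10.0, 0.0)],
    "lane_b": [(0.0, 5.0), (10.0, 5.0), (20.0, 8.0)],
}
print(nearest_lane((3.0, 1.0), lanes))  # ('lane_a', 1.0)
```

A real pipeline would typically also compare the agent's heading with the lane direction, which matters at intersections where lanes overlap. It would also use a spatial index rather than scanning every segment.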

Released by: Baidu's Apollo Project

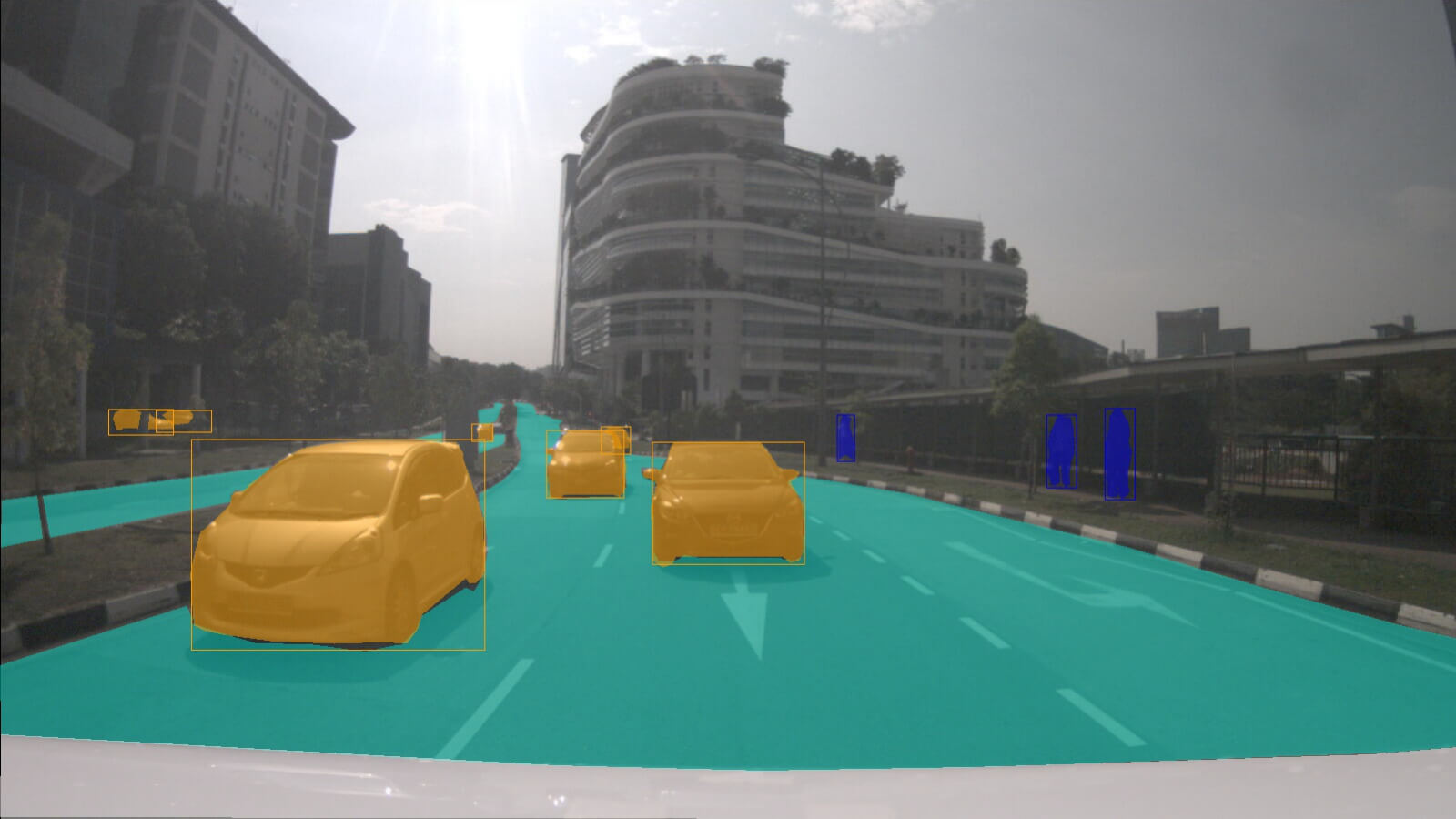

ApolloScape focuses on 3D instance segmentation and large-scale outdoor scenes. It features dense point cloud annotations, lane markings, and drivable area segmentation, all collected in dynamic, often congested urban environments.

It addresses key perception challenges such as occlusion, overlapping traffic participants, and varied road infrastructure. With over 20,000 frames and a wide geographic scope, ApolloScape supports in-depth training and evaluation for vision-centric driving systems.
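
Segmentation tasks such as lane-marking and drivable-area labeling are commonly scored with per-class intersection over union, where IoU = TP / (TP + FP + FN). The sketch below computes it over flattened label maps. The class IDs and the ignore value of 255 are assumptions. ApolloScape's official evaluation scripts may handle details such as ignored pixels differently.

```python
from typing import Dict, Sequence


def per_class_iou(pred: Sequence[int], truth: Sequence[int], num_classes: int,
                  ignore_label: int = 255) -> Dict[int, float]:
    """IoU = TP / (TP + FP + FN) for every class present in pred or truth."""
    if len(pred) != len(truth):
        raise ValueError("prediction and ground truth must have the same length")
    inter = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(pred, truth):
        if t == ignore_label:
            continue  # unlabeled pixels are excluded from scoring
        union[t] += 1  # every labeled pixel counts toward its true class (TP or FN)
        if p == t:
            inter[t] += 1
        elif 0 <= p < num_classes:
            union[p] += 1  # false positive for the predicted class
    return {c: inter[c] / union[c] for c in range(num_classes) if union[c] > 0}


def mean_iou(pred: Sequence[int], truth: Sequence[int], num_classes: int,
             ignore_label: int = 255) -> float:
    """Unweighted mean of the per-class IoUs."""
    ious = per_class_iou(pred, truth, num_classes, ignore_label)
    if not ious:
        raise ValueError("no labeled pixels to score")
    return sum(ious.values()) / len(ious)


# Toy 2x3 label map flattened row by row; 0 = road, 1 = lane marking, 255 = ignore (made-up IDs)
truth = [0, 0, 1, 1, 0, 255]
pred = [0, 1, 1, 1, 1, 1]
print(per_class_iou(pred, truth, num_classes=2))
print(round(mean_iou(pred, truth, num_classes=2), 4))  # 0.4167
```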

These datasets are more than just training material. They shape how we benchmark progress, design experiments, and evaluate autonomous vehicle performance across diverse real-world conditions. Whether you're focused on 3D detection, trajectory prediction, or sensor fusion, starting with the right dataset can influence the quality and reliability of your entire pipeline.

As the field evolves, so will the datasets. But for now, these five provide the strongest foundation for those looking to build, test, or innovate in the world of self-driving technology.