
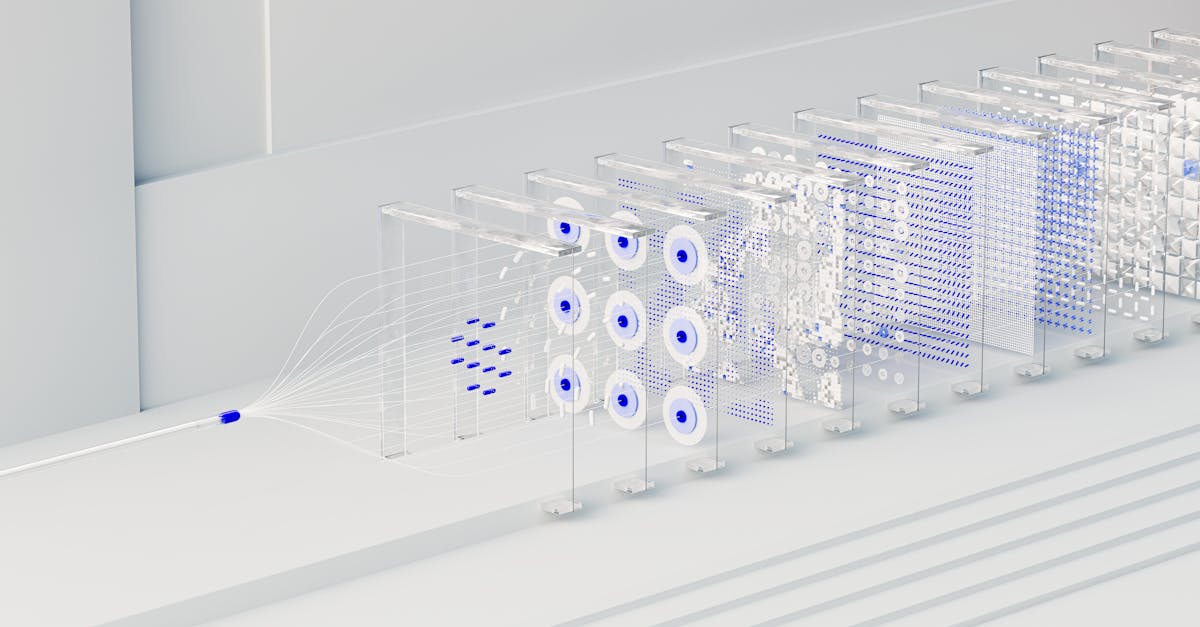

Machine learning can feel like a black box: models give predictions, but how do they arrive at them? If you build products, analyze data, or need to evaluate model output, you deserve clear, actionable explanations. This article walks through real examples that illuminate how different models learn, where they succeed, and where they fail.

Abstract descriptions are useful, but concrete examples teach faster. When you trace a model through a real dataset, you learn to spot common pitfalls like bias, overfitting, and misaligned objectives. These are the problems that cost time and money in production systems.

Key idea: models are mathematical functions trained on examples; understanding those examples clarifies model behavior.

Consider a classic problem: predicting housing prices from features like square footage, number of bedrooms, and neighborhood. A linear regression model approximates price as a weighted sum of features. That simplicity makes the model interpretable, but also limited.

Data: features include area, bedrooms, age, and proximity to transit.

Model: linear regression or a tree-based regressor like Random Forest.

Objective: minimize mean squared error between predicted and actual prices.

Walkthrough: split data into training and validation sets, fit a linear model, then examine the residuals (actual price minus predicted price). If residuals grow with price, the model is probably underfitting high-end properties, for example because price rises nonlinearly with area. If residuals cluster by neighborhood, a location feature is likely missing or poorly encoded.

Actionable checks:

Plot predicted vs actual; look for systematic deviation.

Analyze residuals by feature to find missing interactions.

Try a tree-based model when relationships are nonlinear.
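The residual checks above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production workflow: the toy prices, the `north`/`south` neighborhoods, and the helper names `fit_line` and `mean_residual_by_group` are all assumptions made up for this example. The model only sees area, so the neighborhood premium shows up as a systematic residual per group.

```python
from collections import defaultdict
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares with one feature: returns (slope, intercept)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def mean_residual_by_group(xs, ys, groups, slope, intercept):
    """Average (actual - predicted) per group; values far from 0 hint at a missing feature."""
    buckets = defaultdict(list)
    for x, y, g in zip(xs, ys, groups):
        buckets[g].append(y - (slope * x + intercept))
    return {g: mean(r) for g, r in buckets.items()}


# Hypothetical toy data: price (in $1000s) = 2 * area + a premium the model never sees.
areas = [50, 60, 70, 80, 50, 60, 70, 80]
hoods = ["north"] * 4 + ["south"] * 4
prices = [2 * a + (40 if h == "north" else 0) for a, h in zip(areas, hoods)]

slope, intercept = fit_line(areas, prices)
residuals = mean_residual_by_group(areas, prices, hoods, slope, intercept)
print(residuals)  # one neighborhood is consistently underpriced, the other overpriced
```

On real data the same grouping works with any candidate feature: bucket the residuals by it and look for group averages that sit well away from zero.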

Fraud detection is a high-stakes classification task with class imbalance and concept drift. Models that work initially can degrade as fraudsters adapt. Real examples highlight the importance of evaluation metrics beyond accuracy.

Example setup: model predicts fraud probability; the dataset has 0.5% fraudulent transactions.

Use precision-recall curves rather than accuracy.

Prioritize recall within the budget of false positives the operations team can handle.

Implement online monitoring to detect drift in feature distributions.
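To see why accuracy misleads at a 0.5% fraud rate, the sketch below compares a baseline that never flags fraud with a hypothetical detector. The detector's numbers (4 of 5 frauds caught, 16 false alarms) are invented for illustration, and the helper name is an assumption.

```python
def precision_recall_accuracy(y_true, y_pred):
    """Compute precision, recall, and accuracy for binary labels (1 = fraud)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / len(y_true)
    return precision, recall, accuracy


# 1,000 transactions, 5 of them fraudulent (0.5%).
y_true = [1] * 5 + [0] * 995

# Baseline: never flag anything.
always_legit = [0] * 1000

# Hypothetical detector: catches 4 of 5 frauds, raises 16 false alarms.
detector = [1, 1, 1, 1, 0] + [1] * 16 + [0] * 979

baseline_metrics = precision_recall_accuracy(y_true, always_legit)
detector_metrics = precision_recall_accuracy(y_true, detector)
print("baseline:", baseline_metrics)
print("detector:", detector_metrics)
```

The useless baseline scores higher on accuracy than the detector that actually catches fraud, which is why precision and recall are the more informative numbers here.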

Practical technique: calibrate predicted probabilities with methods like isotonic regression so scores map to reliable risk buckets. This lets analysts set thresholds tied to operational costs.
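Isotonic regression can be fitted with the pool-adjacent-violators idea: sort examples by raw score, then merge neighboring groups whenever the observed fraud rate would otherwise decrease. The sketch below is a simplified, standard-library version under stated assumptions (the function name `isotonic_fit` and the six toy scores are made up, and tied scores get no special handling). In practice a library implementation such as scikit-learn's `IsotonicRegression` is the more robust choice.

```python
def isotonic_fit(scores, labels):
    """Pool adjacent violators.

    Returns (sorted_scores, calibrated), where calibrated[i] is the
    non-decreasing fraud-rate estimate for sorted_scores[i].
    """
    pairs = sorted(zip(scores, labels))
    blocks = []  # each block is [sum_of_labels, count]
    for _, y in pairs:
        blocks.append([float(y), 1])
        # Merge backwards while the previous block's mean exceeds the current one.
        while len(blocks) > 1 and (
            blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]
        ):
            s, n = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += n
    calibrated = []
    for s, n in blocks:
        calibrated.extend([s / n] * n)
    return [x for x, _ in pairs], calibrated


# Hypothetical raw model scores and observed outcomes (1 = fraud).
raw_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
outcomes = [0, 1, 0, 0, 1, 1]

sorted_scores, calibrated = isotonic_fit(raw_scores, outcomes)
for s, c in zip(sorted_scores, calibrated):
    print(f"raw score {s:.1f} -> calibrated risk {c:.2f}")
```

Each run of equal calibrated values is effectively a risk bucket, so a threshold can be placed between buckets according to what a false positive and a missed fraud cost.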

Decision trees split data by feature thresholds, producing human-readable rules. They excel when features have clear thresholds, but single trees can overfit.
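A single split can be illustrated by searching for the threshold that leaves the purest groups on each side. The sketch below uses Gini impurity, one common criterion, and assumes binary labels; the function names and the toy values are made up for this example. A full tree repeats this search recursively on each side of the chosen split.

```python
def gini(labels):
    """Gini impurity for binary labels: 0 means the group is pure."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_split(values, labels):
    """Return (threshold, weighted_gini) for the rule `value <= threshold`."""
    n = len(values)
    candidates = sorted(set(values))
    best = (None, float("inf"))
    # Try the midpoint between each pair of neighboring distinct values.
    for lo, hi in zip(candidates, candidates[1:]):
        t = (lo + hi) / 2
        left = [y for v, y in zip(values, labels) if v <= t]
        right = [y for v, y in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (t, score)
    return best


# Hypothetical feature values and binary labels.
feature = [1, 2, 3, 10, 11, 12]
target = [0, 0, 0, 1, 1, 1]

threshold, impurity = best_split(feature, target)
print(f"rule: feature <= {threshold} (weighted Gini {impurity:.2f})")
```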

When to pick trees: you need interpretable decision rules, want to visualize splits, or have features with meaningful thresholds or categories.

"If a model can't explain its decisions, it's hard to fix or trust it when things go wrong."

To reduce variance, ensemble trees into Random Forests or Gradient Boosted Trees. These ensemble methods usually provide better accuracy, and tools like feature importance and SHAP values help recover interpretability.

Neural networks shine on high-dimensional inputs such as images. Convolutional Neural Networks (CNNs) learn spatial filters that detect edges, textures, and objects.

Real example: training a CNN to classify images of damaged vs intact products on an assembly line.

Collect a balanced dataset with representative lighting and angles.

Use data augmentation (rotation, brightness) to improve generalization.

Monitor validation loss and use early stopping to avoid overfitting.
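Early stopping reduces to a small amount of bookkeeping: remember the best validation loss seen so far and stop once it has not improved for a set number of epochs. The sketch below is framework-independent; the function name, the `patience` and `min_delta` parameters, and the loss values are assumptions for illustration.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses.

    Training stops at the first epoch that is `patience` epochs past the best one.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    # Never triggered: training ran to the last epoch.
    return len(val_losses) - 1, best_epoch


# Hypothetical validation losses: improving, then creeping back up.
losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=2)
print(f"stop at epoch {stop_epoch}, restore weights from epoch {best_epoch}")
```

In a real training loop the weights from the best epoch would be checkpointed so they can be restored after stopping.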

Diagnostics: visualize activation maps or Grad-CAM heatmaps to confirm the network focuses on defects rather than irrelevant features like labels printed on packaging.

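    # Example pseudocode training loop (PyTorch-style).
    # build_cnn, train_loader, loss_fn, optimizer, and epochs are assumed to be defined elsewhere.
    model = build_cnn()
    for epoch in range(epochs):
        for batch in train_loader:
            optimizer.zero_grad()                   # clear gradients from the previous step
            preds = model(batch['images'])          # forward pass
            loss = loss_fn(preds, batch['labels'])  # compare predictions with labels
            loss.backward()                         # backpropagate
            optimizer.step()                        # update weights

When labels are unavailable, clustering techniques reveal structure. K-means partitions data into groups, while density-based methods find arbitrarily shaped clusters.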

Real use case: segment customers by purchase frequency, average order value, and product categories to design tailored campaigns.

Preprocess numeric features with scaling.

Use dimensionality reduction (PCA or UMAP) to visualize clusters.

Select cluster count with silhouette score or by business interpretability.
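The scaling and clustering steps can be sketched with the standard library alone. Everything specific here is an assumption for illustration: the six toy customers, the two features (orders per month, average order value), and the choice to seed the centroids with two hand-picked points so the run is deterministic. Real projects would typically use a library implementation with better initialization, and would compare several cluster counts.

```python
from statistics import mean, pstdev


def standardize(rows):
    """Scale each column to zero mean and unit variance so no feature dominates distances."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sigmas = [pstdev(c) or 1.0 for c in cols]  # guard against constant columns
    return [[(v - m) / s for v, m, s in zip(row, mus, sigmas)] for row in rows]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, init_centroids, iterations=20):
    """Plain k-means: assign each point to its nearest centroid, then recompute centroids."""
    centroids = [list(c) for c in init_centroids]
    labels = []
    for _ in range(iterations):
        labels = [
            min(range(len(centroids)), key=lambda k: sq_dist(p, centroids[k]))
            for p in points
        ]
        new_centroids = []
        for k in range(len(centroids)):
            members = [p for p, l in zip(points, labels) if l == k]
            new_centroids.append(
                [mean(col) for col in zip(*members)] if members else centroids[k]
            )
        if new_centroids == centroids:  # converged
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical customers: (orders per month, average order value).
customers = [[1, 20], [2, 25], [1, 30], [10, 200], [12, 220], [11, 210]]
scaled = standardize(customers)
segments, centers = kmeans(scaled, init_centroids=[scaled[0], scaled[3]])
print(segments)
```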

Tip: combine clustering with supervised learning: predict cluster membership to automate future segmentation on new users.

Metrics must reflect business goals. For churn models, true positives may save months of revenue; for search ranking, small relevance improvements shift engagement.

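Classification: prefer precision-recall metrics when classes are imbalanced, and ROC AUC when they are roughly balanced.

Regression: use mean absolute error when outliers are common, since it penalizes large errors less heavily than mean squared error.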

Ranking: use NDCG or MAP for relevance tasks.
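For the ranking case, NDCG can be computed directly from graded relevance labels in ranked order. The sketch below uses the linear-gain form (relevance divided by a log discount); some implementations use an exponential gain instead, so treat this as one variant. The relevance grades are invented for illustration.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain: relevance at rank r is divided by log2(r + 1)."""
    return sum(rel / math.log2(rank + 1) for rank, rel in enumerate(relevances, start=1))


def ndcg(relevances):
    """DCG normalized by the DCG of the ideal (descending) ordering."""
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal > 0 else 0.0


# Hypothetical relevance grades for the results in the order the ranker returned them.
perfect_ranking = [3, 2, 1, 0]
reversed_ranking = [0, 1, 2, 3]

print(f"perfect:  {ndcg(perfect_ranking):.3f}")
print(f"reversed: {ndcg(reversed_ranking):.3f}")
```

Because the discount shrinks with rank, putting the most relevant result first matters far more than fixing the order near the bottom of the list.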

Always translate a metric into business impact: what does a 1% lift in recall mean for cost or revenue?

Models reflect training data. If historical data encodes bias, models will reproduce it. Real examples such as loan approvals or hiring filters show why audits and counterfactual checks matter.

Practical steps:

Measure performance across demographic groups.

Use reweighting or adversarial debiasing when necessary.

Document data provenance and label processes for transparency.
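Measuring performance across groups can start with something as simple as recall per group. The sketch below uses invented labels and two hypothetical groups, `A` and `B`; the helper name is an assumption. The overall number hides that nearly all the misses fall on one group.

```python
from collections import defaultdict


def recall_by_group(y_true, y_pred, groups):
    """Recall (share of actual positives that were predicted positive) per group."""
    hits = defaultdict(int)
    positives = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            positives[g] += 1
            hits[g] += int(p == 1)
    return {g: hits[g] / positives[g] for g in positives}


# Hypothetical audit data: 4 actual positives in each group.
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
groups = ["A"] * 6 + ["B"] * 6

per_group = recall_by_group(y_true, y_pred, groups)
overall = recall_by_group(y_true, y_pred, ["all"] * len(y_true))["all"]
print(f"overall recall: {overall:.3f}")
print(per_group)
```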

"A model that performs well on average but poorly on a subgroup can create real harm and regulatory risk."

Deployment is when models meet reality. Monitor prediction distributions, input feature drift, and business KPIs. Implement automated alerts and safe rollback procedures.

Checklist for production readiness:

Define service-level objectives (latency, availability).

Set up model performance dashboards with cohort analysis.

Build a retraining pipeline triggered by drift or schedule.
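A drift-triggered retraining pipeline needs some numeric drift signal. One widely used option, chosen here as an illustration rather than prescribed by the checklist above, is the Population Stability Index (PSI), which compares how a feature's values fall into bins at training time versus in production. The bin edges, the sample values, and the `eps` floor for empty bins are assumptions.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples of one feature.

    `edges` are ascending bin boundaries; a value v falls in the bin whose
    index is the number of edges strictly below v.
    """

    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(1 for e in edges if v > e)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values at training time and in production.
training = [10, 20, 30, 40, 50, 60, 70, 80]
live_stable = list(training)
live_shifted = [v + 40 for v in training]
edges = [25, 50, 75]

print(f"stable:  {psi(training, live_stable, edges):.3f}")
print(f"shifted: {psi(training, live_shifted, edges):.3f}")
```

A common rule of thumb treats a PSI above roughly 0.2 as a shift worth investigating, but the right alert threshold depends on the feature and should be tuned against past incidents.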

When decisions must be explained, use model-agnostic tools like LIME or SHAP, or prefer inherently interpretable models such as generalized additive models (GAMs).

LIME explains local behavior by approximating the model near a prediction.

SHAP assigns each feature an additive contribution; the contributions sum to the difference between the prediction and a baseline (the average prediction).

Partial dependence plots reveal marginal effects of features.

These techniques help validate that models use sensible signals. For example, in medical imaging, SHAP can show whether a model relies on artifacts rather than pathology.
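The additive property is easiest to see for a linear model, where (assuming features are treated as independent) each feature's SHAP-style contribution is its weight times the feature's deviation from its mean in a background sample. The sketch below does not call the SHAP library; the weights, the background data, and the function names are made up to show the bookkeeping.

```python
from statistics import mean


def predict(weights, bias, x):
    """Linear model: bias plus the weighted sum of features."""
    return bias + sum(w * xi for w, xi in zip(weights, x))


def linear_contributions(weights, x, background):
    """Contribution of feature i: w_i * (x_i - mean of feature i in the background data)."""
    feature_means = [mean(col) for col in zip(*background)]
    return [w * (xi - m) for w, xi, m in zip(weights, x, feature_means)]


# Hypothetical model and data.
weights, bias = [2.0, -1.0], 5.0
background = [[1.0, 0.0], [3.0, 2.0], [2.0, 4.0]]
x = [4.0, 1.0]

baseline = mean([predict(weights, bias, row) for row in background])
phi = linear_contributions(weights, x, background)
prediction = predict(weights, bias, x)

print(f"baseline {baseline}, contributions {phi}, prediction {prediction}")
# The contributions account exactly for the gap between prediction and baseline.
```

For nonlinear models the contributions are no longer this simple to compute, which is what the dedicated explainers handle, but the same sum-to-the-gap check remains a useful sanity test.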

Start reproducing examples with established libraries and explainability tools. Practical learning comes from experimentation.

Use scikit-learn for classical models and pipelines.

Train deep models with TensorFlow or PyTorch for flexible experimentation.

Explore research and tutorials on arXiv for state-of-the-art methods.

Encounter a model that performs oddly? Here are specific diagnostics and fixes drawn from real projects.

Symptom: validation accuracy is much lower than training accuracy (overfitting). Fix: add regularization, reduce model capacity, or gather more labeled data.

Symptom: model performs well overall but fails on a subgroup. Fix: augment dataset for that subgroup or apply fairness-aware retraining.

Symptom: predictions drifting over time. Fix: implement feature drift detection and schedule retraining.

Which model should I try first? Start with a simple baseline: linear models for numeric prediction, logistic regression for binary classification, and k-means for segmentation. Baselines reveal whether complexity is necessary.

How much data do I need? It depends on signal complexity. For tabular problems, thousands of labeled examples often suffice. For image or language tasks, tens of thousands may be needed for high performance.

How do I prevent overfitting? Use cross-validation, regularization, early stopping, and simpler models when data is limited.
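Cross-validation itself is mostly index bookkeeping: split the data into k folds and let each fold serve once as the validation set. The sketch below generates those splits without shuffling; the function name is an assumption, and in practice the indices would usually be shuffled first (or stratified by label) before splitting.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; every sample is used for validation exactly once."""
    indices = list(range(n_samples))
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


folds = list(k_fold_indices(10, 3))
for train_idx, val_idx in folds:
    print(f"train on {len(train_idx)} samples, validate on {val_idx}")
```

Averaging the validation metric across folds gives a steadier estimate than a single split, which makes it easier to tell whether added model complexity is actually helping.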

Understanding models means studying how they behave on real data, not just reading theory. Walk through examples: inspect residuals for regressors, analyze precision-recall trade-offs for imbalanced classifiers, visualize activations for neural networks, and monitor deployed models for drift.

Key takeaways:

Match model choice to the problem and the need for interpretability.

Use diagnostics like residual plots, calibration curves, and feature importance to validate behavior.

Translate metrics into business impact before production deployment.

Plan monitoring and retraining to maintain performance over time.

Start implementing these strategies this week by evaluating an existing model with one new diagnostic: plot residuals or a calibration curve and note what it reveals about your model's blind spots. That single exercise often surfaces the highest-leverage improvements.