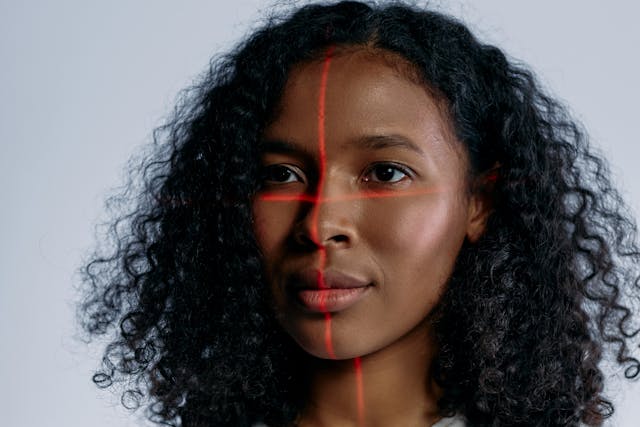

When we talk about bias in computer vision, specifically in the context of face recognition and related technologies, we refer to systematic differences in performance across demographic groups. These differences often emerge along lines of skin tone, gender, age, and other attributes—even when the system is correctly built from a technical standpoint.

Studies show that many commercial facial recognition systems have far higher error rates for women and people of color than for light-skinned men.

These disparities are not just statistical curiosities. They reflect how the data, the model design, and the deployment context interact to produce outcomes that disadvantage certain groups.

A foundational study by Joy Buolamwini and Timnit Gebru demonstrated that facial-analysis systems from major companies were accurate at around 0.8 % error for lighter-skinned men, but shot up to nearly 35 % error for darker-skinned women.

From a conceptual point of view, bias enters when the representation of groups in the training data is uneven, when feature extraction or design assumptions embed normative reference groups (often light-skinned male faces), or when deployment contexts assume that one model fits all.

Recognizing bias starts with acknowledging difference in error: any system that handles people must ask which people it works best for, and which people may be left behind.

Several inter‐linked factors cause the “vision bias” problem. First is data imbalance. Many large face-image datasets used for training and benchmarking contain predominantly light-skinned or male faces, under-representing women or people with darker skin tones. This means the model has less experience on those faces and thus tends to perform worse (MIT Media Lab).

Second, commercial models often optimize for overall accuracy rather than fairness across subgroups. A high global accuracy can hide vast disparities: for instance, the National Institute of Standards and Technology (NIST) found that many algorithms had false positive rates that varied by a factor of ten or more between demographic groups (CSIS).

Third, real-world deployment introduces further risk. If a biased system is used in high-stakes contexts (law-enforcement, border control, job assessments), the consequences for those misidentified or overlooked are grave. For example, mis-identification rates higher for minorities can exacerbate existing social inequalities and discrimination (Amnesty International Canada).

Therefore, the risk is not only technical: it is social and ethical. A facial recognition system that works well for one group but poorly for another can reinforce or amplify unfair treatment.

The practical effects can include wrongful arrests, unequal access, mis-service, and distrust in the technology.

Mitigating bias in computer vision requires more than better code. It begins with better data.

One major approach is dataset improvement: ensuring training and benchmarking data reflect the full diversity of people the system will see. This means actively collecting images across skin tones, genders, ages, and other relevant attributes.

Another is algorithmic auditing and fairness measurement. Developers and deployers can test models on subgroup performance (e.g., separate accuracy for darker-skinned women vs lighter-skinned men) and track error rates by demographic. The NIST study provides a template for this (NIST).

Third, deployment-aware governance matters. Even a well-balanced model can be misused if the context ignores fairness: decisions should account for false positives and false negatives differently across groups, and use of these systems needs transparency, human oversight, and rights to challenge outcomes.

Finally, the broader lens is one of ongoing reflection. Bias in vision is not a one-time fix. It evolves as datasets change, as uses evolve, and as social norms shift. An open conversation can encourage tech professionals, policy-makers, and the general public to ask:

Who is the system built for?

Who might it miss?

And how will we know when it works fairly?

Understanding bias in vision is not about blaming technology—it is about recognizing that when machines “see,” they carry the imprint of human choices.

By explaining how and why these biases arise, and what can be done to address them, we can move toward vision systems that serve everyone more equally and responsibly.